1 - Communicating with Kanto-CM via gRPC

Kanto container management binds to a unix socket (default: /run/container-management/container-management.sock) and exposes a gRPC interface that provides all the functionality of the kanto-cm CLI programmatically.

The easiest way to access this API from Rust is to create a new crate:

```shell
$ cargo new talk-to-kanto
```

Dependencies

At the time of writing, the most feature-rich gRPC library for Rust is tonic. Add the following to your Cargo.toml to make tonic and the tokio async runtime available to your crate. tower and hyper are needed to connect over the unix socket, and serde/serde_json are used to (de-)serialize the generated types.

```toml
[dependencies]
prost = "0.11"
tokio = { version = "1.0", features = ["rt-multi-thread", "time", "fs", "macros", "net"] }
tokio-stream = { version = "0.1", features = ["net"] }
tonic = { version = "0.8.2" }
tower = { version = "0.4" }
http = "0.2"
hyper = { version = "0.14", features = ["full"] }
serde = { version = "1.0.147", features = ["derive"] }
serde_json = { version = "1.0.89", default-features = false, features = ["alloc"] }

[build-dependencies]
tonic-build = "0.8.2"
```

Compiling protobufs

The easiest way to obtain the kanto-cm .proto files is to add the container management repo in your project root as a git submodule:

```shell
$ git submodule init
$ git submodule add https://github.com/eclipse-kanto/container-management.git
$ git submodule update --init --recursive
```

You should now have the container-management repository available.

To compile the .proto files at build time, create a custom build.rs in the project root:

```shell
$ touch build.rs
```

Add the following main function to the build.rs:

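```rust
fn main() -> Result<(), Box<dyn std::error::Error>> {
    tonic_build::configure()
        // Only the client side is needed to talk to kanto-cm.
        .build_server(false)
        // Generate a single mod.rs that nests all generated packages.
        .include_file("mod.rs")
        // Make every generated type (de-)serializable with serde.
        .type_attribute(".", "#[derive(serde::Serialize, serde::Deserialize)]")
        .compile(
            &["api/services/containers/containers.proto"],
            &["container-management/containerm/"],
        )?;
    Ok(())
}
```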

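The kanto-cm protobufs use deeply nested package names, which tonic does not handle conveniently on its own: every package is generated into a separate file, and the generated code refers to types in other packages through relative paths, so the Rust modules have to mirror the package hierarchy. The .include_file("mod.rs") line solves this by generating one additional file that wraps all generated code in such nested modules, which can then be included in main.rs.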

"#[derive(serde::Serialize, serde::Deserialize)]" makes all structures (de-)serializable via serde.

Importing generated Rust modules

Now in src/main.rs add the following to import the generated Rust modules:

```rust
// Include the generated mod.rs (written to OUT_DIR by build.rs).
pub mod cm {
    tonic::include_proto!("mod");
}

use cm::github::com::eclipse_kanto::container_management::containerm::api::services::containers as cm_services;
use cm::github::com::eclipse_kanto::container_management::containerm::api::types::containers as cm_types;
```

All kanto-cm services are now namespaced under cm_services, and the container types under cm_types.
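For orientation, here is a hand-written stand-in for the shape of the generated module tree. It is an approximation inferred from the `use` paths above, not the actual output of tonic-build: the real modules contain many more types, and `ListContainersRequest` is reduced to an empty placeholder struct. `empty_list_request` is a hypothetical helper.

```rust
// Hand-written stand-in for the generated `cm` module tree (approximation).
// Each protobuf package segment becomes one nested `pub mod`.
pub mod cm {
    pub mod github {
        pub mod com {
            pub mod eclipse_kanto {
                pub mod container_management {
                    pub mod containerm {
                        pub mod api {
                            pub mod services {
                                pub mod containers {
                                    // Placeholder for the generated request message.
                                    #[derive(Debug, Default, PartialEq)]
                                    pub struct ListContainersRequest {}
                                }
                            }
                        }
                    }
                }
            }
        }
    }
}

// A short alias keeps call sites readable, like `cm_services` above.
use cm::github::com::eclipse_kanto::container_management::containerm::api::services::containers as cm_services;

pub fn empty_list_request() -> cm_services::ListContainersRequest {
    cm_services::ListContainersRequest {}
}
```

Without the alias, every call site would have to spell out the full nine-segment path.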

Obtaining a unix socket channel

To obtain a unix socket channel:

```rust
use tokio::net::UnixStream;
use tonic::transport::{Endpoint, Uri};
use tower::service_fn;

// Inside an async fn returning Result<_, Box<dyn std::error::Error>>:
let socket_path = "/run/container-management/container-management.sock";

// The URI is only a placeholder; the connector below ignores it.
let channel = Endpoint::try_from("http://[::]:50051")?
    .connect_with_connector(service_fn(move |_: Uri| UnixStream::connect(socket_path)))
    .await?;
```

This is a bit of a workaround: at the time of writing, tonic does not support connecting to a unix socket directly. The URI passed to Endpoint::try_from() is only a placeholder that tonic requires; no TCP connection to port 50051 is ever attempted. Because connect_with_connector() is given a custom connector, tonic calls it instead of its default TCP connector; the connector ignores the URI and returns a tokio UnixStream, and the gRPC channel is established over that stream.
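Because the placeholder URI is never dialed, a missing or misnamed socket only surfaces as a connection error from connect_with_connector(). A small std-only pre-flight check can give a clearer message before connecting. This is a sketch that is not part of the original code; `socket_is_available` is a hypothetical helper name.

```rust
use std::fs;
use std::io;
use std::os::unix::fs::FileTypeExt;
use std::path::Path;

/// Hypothetical pre-flight check: Ok(()) if `path` exists and is a
/// unix domain socket, otherwise a descriptive io::Error.
pub fn socket_is_available(path: &Path) -> io::Result<()> {
    // fs::metadata follows symlinks, just like connecting to the path does.
    let meta = fs::metadata(path)?;
    if meta.file_type().is_socket() {
        Ok(())
    } else {
        Err(io::Error::new(
            io::ErrorKind::InvalidInput,
            format!("{} exists but is not a unix socket", path.display()),
        ))
    }
}
```

Calling it with `Path::new("/run/container-management/container-management.sock")` before building the Endpoint distinguishes "container management is not running" from other gRPC errors.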

Making a simple gRPC call to kanto-cm

All that is left is to use the opened channel to issue a simple “list containers” request to kanto.

```rust
// Generate a CM client that handles container-related requests (see protobufs)
let mut client = cm_services::containers_client::ContainersClient::new(channel);
let request = tonic::Request::new(cm_services::ListContainersRequest {});
let response = client.list(request).await?;
```

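Since all tonic-generated structures were made (de-)serializable, serde_json::to_string() can print the response message as a JSON string. Note that client.list() returns a tonic::Response wrapper, which does not itself implement Serialize, so serialize the inner message:

```rust
println!("{}", serde_json::to_string(response.get_ref())?);
```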

2 - Kanto Auto deployer (KAD)

TL;DR: To deploy a container in the final Leda image, all you generally need to do is add its manifest to the kanto-containers directory and rebuild.

Kanto-CM does not currently provide a stable feature for the automatic deployment of containers from manifest files, similar to k3s' automatic deployment of Kubernetes manifests placed in the /var/lib/rancher/k3s/server/manifests directory.

This can be worked around with a bash script for each container that runs on boot and makes sure the container is deployed. Even though this approach works, it is not very structured and would require a lot of repeated code.

That is why the “Kanto Auto deployer” tool was developed. It directly implements the ideas in Communicating with Kanto-CM via gRPC.

The compiled binary takes a path to a directory containing the json manifests, parses them into Rust structures and sends gRPC requests to kanto container management to deploy these containers.

Manifest structure

Because Kanto CM uses different JSON formats for the internal state representation of a container (from the gRPC API) and for deployment via the Container Management-native init_dir mechanism, KAD supports both through its “manifests_parser” module.

The conversion between formats is automatic (a warning is logged when it is attempted), so you do not need to provide extra options when using one format or the other.

Container Management Manifests Format

This is the CM-native format, described in the Kanto-CM documentation. It is the recommended format since KAD is supposed to be replaced by native CM deployment modules in the future and this manifest format will be compatible with that.

It also allows you to omit options (defaults will be used). KAD logs a warning when the “manifests_parser” attempts to convert this manifest format to the gRPC message format (internal state representation).
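The idea of omitting options and falling back to defaults can be sketched with `Option` fields. Everything here is hypothetical: `SparseManifest`, `FullManifest` and `into_full` are illustrative names, not KAD's manifests_parser types, and the fallback values are copied from the internal-state example in this document rather than taken from kanto-cm's real defaults.

```rust
/// Hypothetical sparse manifest: optional settings may be omitted.
#[derive(Debug, Default)]
pub struct SparseManifest {
    pub name: String,
    pub image: String,
    pub network_mode: Option<String>,
    pub runtime: Option<String>,
    pub restart_policy: Option<String>,
    pub env: Option<Vec<String>>,
}

/// Hypothetical fully specified manifest, as a gRPC message would need it.
#[derive(Debug, PartialEq)]
pub struct FullManifest {
    pub name: String,
    pub image: String,
    pub network_mode: String,
    pub runtime: String,
    pub restart_policy: String,
    pub env: Vec<String>,
}

impl SparseManifest {
    /// Fill every omitted field with a fallback value.
    /// The fallbacks are illustrative, copied from the example manifest.
    pub fn into_full(self) -> FullManifest {
        FullManifest {
            name: self.name,
            image: self.image,
            network_mode: self.network_mode.unwrap_or_else(|| "bridge".to_string()),
            runtime: self.runtime.unwrap_or_else(|| "io.containerd.runc.v2".to_string()),
            restart_policy: self.restart_policy.unwrap_or_else(|| "unless-stopped".to_string()),
            env: self.env.unwrap_or_default(),
        }
    }
}
```

Explicitly set values always win; only the `None` fields are filled in.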

Internal State Representation

The KAD “native” manifest format uses exactly the same structure as kanto container management's internal representation of container state. This format does not allow keys in the JSON to be omitted, so these manifests are generally larger and noisier. For example:

```json
{
  "id": "",
  "name": "databroker",
  "image": {
    "name": "ghcr.io/eclipse/kuksa.val/databroker:0.2.5",
    "decrypt_config": null
  },
  "host_name": "",
  "domain_name": "",
  "resolv_conf_path": "",
  "hosts_path": "",
  "hostname_path": "",
  "mounts": [],
  "hooks": [],
  "host_config": {
    "devices": [],
    "network_mode": "bridge",
    "privileged": false,
    "restart_policy": {
      "maximum_retry_count": 0,
      "retry_timeout": 0,
      "type": "unless-stopped"
    },
    "runtime": "io.containerd.runc.v2",
    "extra_hosts": [],
    "port_mappings": [
      {
        "protocol": "tcp",
        "container_port": 55555,
        "host_ip": "localhost",
        "host_port": 30555,
        "host_port_end": 30555
      }
    ],
    "log_config": {
      "driver_config": {
        "type": "json-file",
        "max_files": 2,
        "max_size": "1M",
        "root_dir": ""
      },
      "mode_config": {
        "mode": "blocking",
        "max_buffer_size": ""
      }
    },
    "resources": null
  },
  "io_config": {
    "attach_stderr": false,
    "attach_stdin": false,
    "attach_stdout": false,
    "open_stdin": false,
    "stdin_once": false,
    "tty": false
  },
  "config": {
    "env": [
      "RUST_LOG=info",
      "vehicle_data_broker=debug"
    ],
    "cmd": []
  },
  "network_settings": null,
  "state": {
    "pid": -1,
    "started_at": "",
    "error": "",
    "exit_code": 0,
    "finished_at": "",
    "exited": false,
    "dead": false,
    "restarting": false,
    "paused": false,
    "running": false,
    "status": "",
    "oom_killed": false
  },
  "created": "",
  "manually_stopped": false,
  "restart_count": 0
}
```

The only difference from the actual internal state representation is that fields in the manifest can be left empty ("") if they are not important for the deployment. kanto-cm fills these values with defaults after deployment.

For example, you do not need to specify the container “id” in the manifest, as a unique UUID is assigned automatically after deployment.

Container deployment in Leda

Kanto-auto-deployer can run as a one-shot util that goes through the manifest folder (default: /data/var/containers/manifests) and deploys required containers.
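The one-shot pass boils down to listing the manifest folder and handing each JSON file to the parser. A std-only sketch of the listing step; `find_manifests` is a hypothetical name, and KAD's actual traversal (recursion, ordering, file filters) may differ.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Hypothetical helper: collect every `*.json` file directly inside `dir`,
/// sorted by path so deployments happen in a predictable order.
pub fn find_manifests(dir: &Path) -> io::Result<Vec<PathBuf>> {
    let mut manifests = Vec::new();
    for entry in fs::read_dir(dir)? {
        let path = entry?.path();
        // Skip sub-directories and anything that is not a .json file.
        let is_json = path.extension().map_or(false, |ext| ext == "json");
        if path.is_file() && is_json {
            manifests.push(path);
        }
    }
    manifests.sort();
    Ok(manifests)
}
```

Sorting is a design choice of this sketch: it makes the deployment order reproducible, which keeps logs comparable between boots.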

Passing the --daemon flag enables the “filewatcher” module, which continuously monitors the provided path for new or changed manifests.
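The text does not say how the filewatcher module detects changes. As a dependency-free illustration, here is a swapped-in technique, polling: take a snapshot of file modification times and compare it with the previous one. Production watchers usually rely on OS notifications such as inotify instead, and `snapshot`/`changed_since` are hypothetical names.

```rust
use std::collections::BTreeMap;
use std::fs;
use std::io;
use std::path::{Path, PathBuf};
use std::time::SystemTime;

/// Modification time of every regular file directly inside `dir`.
pub fn snapshot(dir: &Path) -> io::Result<BTreeMap<PathBuf, SystemTime>> {
    let mut times = BTreeMap::new();
    for entry in fs::read_dir(dir)? {
        let entry = entry?;
        let meta = entry.metadata()?;
        if meta.is_file() {
            times.insert(entry.path(), meta.modified()?);
        }
    }
    Ok(times)
}

/// Files that were created or modified between two snapshots.
pub fn changed_since(
    old: &BTreeMap<PathBuf, SystemTime>,
    new: &BTreeMap<PathBuf, SystemTime>,
) -> Vec<PathBuf> {
    new.iter()
        .filter(|(path, time)| old.get(*path) != Some(*time))
        .map(|(path, _)| path.clone())
        .collect()
}
```

A daemon loop would call snapshot() at a fixed interval (for example with std::thread::sleep), deploy every path returned by changed_since(), and keep the newest snapshot. Polling trades latency and a little CPU for simplicity; coarse mtime resolution can also hide two edits made in quick succession.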

The Bitbake recipe for building and installing the auto deployer service can be found at kanto-auto-deployer_git.bb.

This recipe also takes all manifests in the kanto-containers directory and installs them in the directory specified by the KANTO_MANIFESTS_DIR BitBake variable (weak default: /var/containers/manifests).

Important: To deploy a container in the final Leda image, all you generally need to do is add its manifest to the kanto-containers directory and rebuild.

Conditional compilation of the filewatcher module

To reduce binary size, the --daemon option is gated behind the filewatcher Cargo feature (enabled by default).

To compile KAD without the filewatcher module run: cargo build --release --no-default-features.
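In code, such gating can look like the sketch below. Only the feature name filewatcher comes from KAD; `Mode`, `parse_mode` and `daemon_mode` are hypothetical, and for the feature to be on by default it would be listed under `default` in the `[features]` table of KAD's Cargo.toml. Built with --no-default-features, the `Daemon` variant and the watcher code path do not exist in the binary at all.

```rust
/// How the deployer should run (hypothetical).
#[derive(Debug, PartialEq)]
pub enum Mode {
    /// Deploy everything in the manifest folder once, then exit.
    OneShot,
    /// Keep watching the folder; only exists when the feature is compiled in.
    #[cfg(feature = "filewatcher")]
    Daemon,
}

pub fn parse_mode(args: &[&str]) -> Result<Mode, String> {
    if args.contains(&"--daemon") {
        daemon_mode()
    } else {
        Ok(Mode::OneShot)
    }
}

#[cfg(feature = "filewatcher")]
fn daemon_mode() -> Result<Mode, String> {
    Ok(Mode::Daemon)
}

#[cfg(not(feature = "filewatcher"))]
fn daemon_mode() -> Result<Mode, String> {
    // The watcher code is not compiled into this binary.
    Err("--daemon requires building with the `filewatcher` feature".to_string())
}
```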

(Implemented in Leda Utils PR#35)

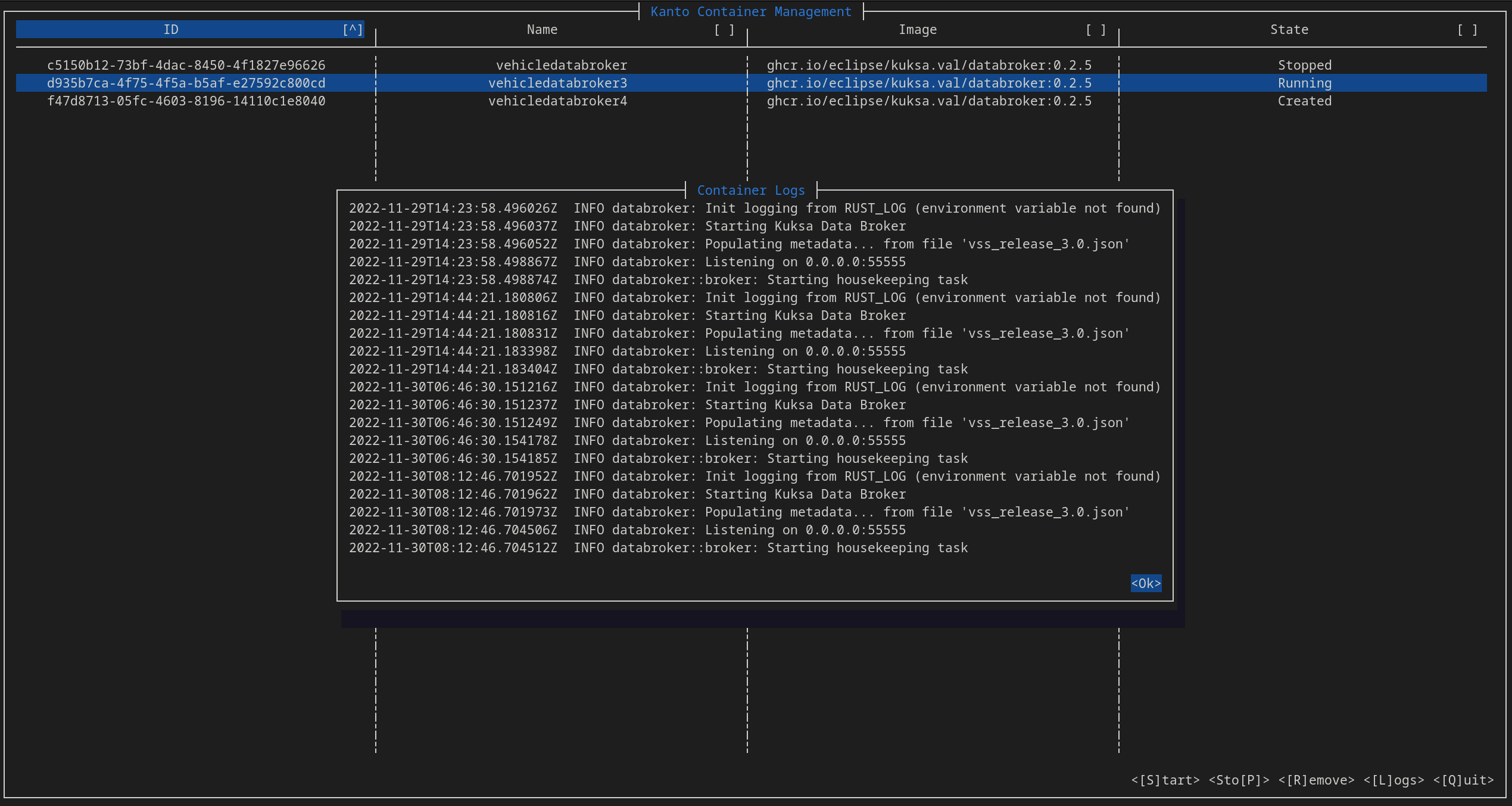

3 - Kantui

The k8s ecosystem comes with many utilities that make managing containers easier (such as k9s). The kantui util aims to be a “nice” text user interface that lets the user start, stop and remove containers deployed in kanto-cm and view their logs.

Development notes

This tool is again based on the ideas in Communicating with Kanto-CM via gRPC.

It spins up two threads: a UI thread (drawing/updating the UI) and an IO thread (communicating with kanto-cm via gRPC). The two threads communicate over an async-priority-channel, in which ListContainers requests have a lower priority than Start/Stop/Remove/Get Logs (“user interaction”) requests.

This is an “eventually fair” communication mechanism. Even if kanto-cm is handling a slow request (such as stopping a container that does not respect SIGTERM), the UI thread is never blocked, which keeps the UI responsive. The channel holds 5 requests and the UI runs at 30 fps, so even if the UI gets out of sync with the actual state of container management, it would be “only” for 5 out of 30 frames.
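The two properties described above, user requests overtaking list refreshes and a fixed capacity of 5 that the UI never waits on, can be modeled with a synchronous, single-threaded stand-in built on std's BinaryHeap. This is not async-priority-channel's API: `Request`, `BoundedPriorityQueue` and `try_push` are hypothetical names, and handing a request back when the queue is full is this sketch's choice, not necessarily kantui's. For scale: at 30 fps, 5 frames last 5/30 s, roughly 167 ms.

```rust
use std::cmp::Ordering;
use std::collections::BinaryHeap;

/// Hypothetical requests sent from the UI thread to the IO thread.
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum Request {
    ListContainers,
    Start(String),
    Stop(String),
    Remove(String),
    GetLogs(String),
}

impl Request {
    /// User interaction outranks the periodic container-list refresh.
    fn priority(&self) -> u8 {
        match self {
            Request::ListContainers => 0,
            _ => 1,
        }
    }
}

#[derive(Debug, PartialEq, Eq)]
struct Entry {
    priority: u8,
    seq: u64, // unique per queue, so ordering stays consistent with Eq
    request: Request,
}

impl Ord for Entry {
    fn cmp(&self, other: &Self) -> Ordering {
        // BinaryHeap pops the greatest entry: higher priority first,
        // then the older (smaller) sequence number among equals.
        self.priority
            .cmp(&other.priority)
            .then_with(|| other.seq.cmp(&self.seq))
    }
}

impl PartialOrd for Entry {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> {
        Some(self.cmp(other))
    }
}

/// Bounded priority queue; `try_push` never blocks the caller.
pub struct BoundedPriorityQueue {
    heap: BinaryHeap<Entry>,
    capacity: usize,
    next_seq: u64,
}

impl BoundedPriorityQueue {
    pub fn new(capacity: usize) -> Self {
        Self { heap: BinaryHeap::new(), capacity, next_seq: 0 }
    }

    /// Hands the request back instead of waiting when the queue is full.
    pub fn try_push(&mut self, request: Request) -> Result<(), Request> {
        if self.heap.len() >= self.capacity {
            return Err(request);
        }
        let priority = request.priority();
        self.heap.push(Entry { priority, seq: self.next_seq, request });
        self.next_seq += 1;
        Ok(())
    }

    pub fn pop(&mut self) -> Option<Request> {
        self.heap.pop().map(|entry| entry.request)
    }
}
```

List refreshes are only served once no user request is pending, which is why the scheme is "eventually" rather than strictly fair.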

Cursive and ncurses-rs

The cursive crate is used as a high level “framework” as it allows very easy handling of UI events via callbacks, though this might be prone to callback hell.

The default backend for cursive is ncurses-rs, which is a very thin Rust wrapper over the standard ncurses library. In theory this would be the optimal backend for our case, as ncurses is a very old and stable library that has buffering (other backends lead to flickering of the UI on updates) and is dynamically linked (smaller final binary size).

The ncurses-rs wrapper however is not well-suited to cross-compilation as it has a custom build.rs that generates a small C program, compiles it for the target and tries to run it on the host. The only reason for this C program to exist is to check the width of the char type. Obviously, the char type on the host and the target might be of different width and this binary might not even run on the host machine if the host and target architectures are different.
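The usual cross-compilation-safe pattern is to ask Cargo about the target instead of running a probe: Cargo passes build scripts CARGO_CFG_TARGET_* environment variables that describe the target, not the host. The sketch below only illustrates that principle; it is not a patch for ncurses-rs (Cargo does not expose the width of C's char type directly), and `TargetInfo`/`target_info` are hypothetical names. The lookup function is a parameter so the helper can be exercised without a real build; in build.rs you would pass `|key| std::env::var(key).ok()`.

```rust
/// Target facts a build script might need, read from Cargo-provided
/// variables instead of compiling and running a probe on the host.
#[derive(Debug, PartialEq)]
pub struct TargetInfo {
    pub arch: String,
    pub pointer_width: u32,
}

/// `lookup` abstracts over `std::env::var`; returns None if a variable
/// is missing or cannot be parsed.
pub fn target_info(lookup: impl Fn(&str) -> Option<String>) -> Option<TargetInfo> {
    let arch = lookup("CARGO_CFG_TARGET_ARCH")?;
    let pointer_width = lookup("CARGO_CFG_TARGET_POINTER_WIDTH")?.parse().ok()?;
    Some(TargetInfo { arch, pointer_width })
}
```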

After coming to the conclusion that the ncurses-rs backend was not suitable, kantui was migrated to the termion backend + the cursive_buffered_backend crate which mitigates the flickering issue.

```toml
[dependencies]
...
cursive_buffered_backend = "0.5.0"

[dependencies.cursive]
default-features = false
version = "0.16.2"
features = ["termion-backend"]
```

This completely drops the need for ncurses-rs but results in a slightly bigger binary (all statically linked).

Bitbake Recipe

The recipe was created following the guidelines in Generating bitbake recipes with cargo-bitbake and can be found in meta-leda/meta-leda-components/recipes-sdv/eclipse-leda/.

Future improvement notes

- The gRPC channel can get blocked, effectively “blocking” the IO thread until it is freed up again. Maybe open a new channel for each request (slow/resource heavy)?
- Reorganize the code a bit and move all generic functionality into lib.rs.